In a Herald (Daily Telegraph) story this week:

> When confronted, he confessed he’d been having an affair with a single mother he met at the school gates.
>
> “She was vulnerable,” says Janet. “I guess he liked that. It made him feel like a hero.”
>
> Her experience sadly chimes with the findings of a new study of more than 2,750 young married people by the University of Connecticut, which showed that men who are financially dependent on their spouses are the most likely to be unfaithful. In fact, the bigger the earning gap, the more likely they are to have an affair, with those who rely solely on their wives for their income the biggest cheats.

It turns out there are things wrong with this story, even if we restrict ourselves to statistical issues. To find them, you’d need to look at the research paper, which you probably can’t, because it’s paywalled. I should note that these aren’t necessarily all criticisms of the paper for the question it was answering (which was about motivations for fidelity and infidelity), but they are criticisms of how it has been used: to turn a couple of anecdotes into a Deeply Worrying Social Trend.

First, the income data. The researcher writes:

> I calculated the measure from respondents’ and their spouse’s total income earned during the previous year from wages, salaries, commissions, and tips. I excluded self-employment income, because the division between labor income and business income is often measured with substantial error.

That means the group described as ‘rely solely on their wives for their income’ includes all the self-employed men, no matter how much they earn, because their business income counts as zero in this measure. There may well be more of them than voluntarily unemployed house-husbands.
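To make the point concrete, here is a minimal sketch. The quoted passage doesn't give the exact formula for the relative-income measure, so the one below (his wages minus hers, divided by their combined wages, running from −1 to +1) is an assumption, as are the hypothetical `Person` class and the incomes. The only feature taken from the paper is that self-employment income is dropped before anything is calculated.

```python
from dataclasses import dataclass


@dataclass
class Person:
    wages: float            # wages, salaries, commissions, tips
    self_employment: float  # business income: excluded from the measure


def relative_income(husband: Person, wife: Person) -> float:
    """Hypothetical measure: (his wages - her wages) / (combined wages).

    -1 means he has no wage income at all, i.e. he "relies solely on his
    wife"; +1 means she has none. Self-employment income is ignored.
    """
    total = husband.wages + wife.wages
    if total == 0:
        return 0.0  # assumption: couples with no wage income treated as equal
    return (husband.wages - wife.wages) / total


wife = Person(wages=50_000, self_employment=0)
house_husband = Person(wages=0, self_employment=0)
business_owner = Person(wages=0, self_employment=200_000)

print(relative_income(house_husband, wife))   # prints -1.0
print(relative_income(business_owner, wife))  # prints -1.0: looks just as "dependent"
```

Under this assumed measure the house-husband and the well-paid business owner are indistinguishable, which is exactly the problem.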

Second, a somewhat technical point, which I think I last covered in 2012, with two posts on a story about mid-life crisis in chimpanzees.

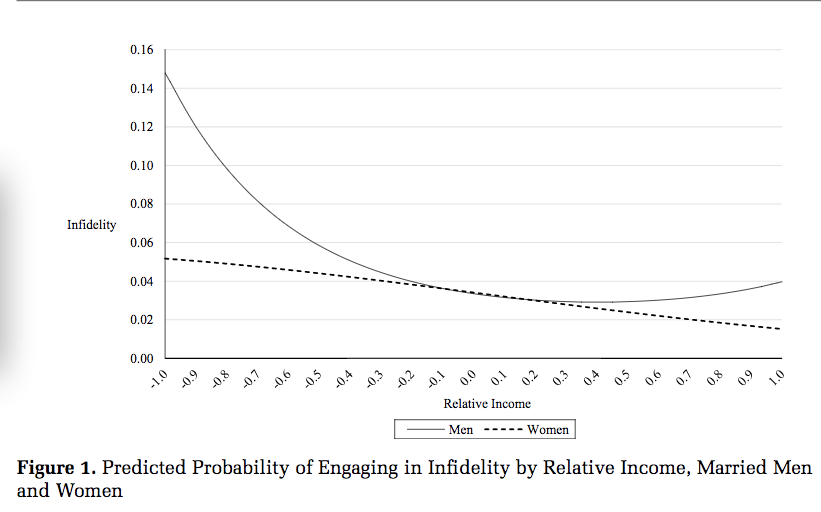

Here’s a summary of the model given in the research paper

Notice how the curved line for men bends away from the straight line for women on both sides? And that the deviation from a straight line looks pretty symmetric? That’s forced by the statistical model.
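Here is why the symmetry is automatic, assuming (the post doesn't spell out the model's form) that the curve for men comes from adding a squared term in relative income to a straight line. If the straight line is a + bx, the squared term adds cx², and cx² takes the same value at x and at −x. The coefficients below are made up, and for simplicity the two lines share an intercept and slope. On the scale of the linear predictor the symmetry is exact; if the graph is drawn on a transformed scale such as probabilities, it will only look approximately symmetric.

```python
import math

# Hypothetical coefficients, not the paper's estimates.
A, B, C = 0.0, -1.0, 0.5


def straight_line(x: float) -> float:
    """Linear-only predictor, like the women's line: a + b*x."""
    return A + B * x


def curved_line(x: float) -> float:
    """Same line plus a squared term, like the men's curve."""
    return A + B * x + C * x ** 2


# The gap between the two is C * x**2, which is identical at x and -x.
for x in (0.25, 0.5, 1.0):
    left_gap = curved_line(-x) - straight_line(-x)
    right_gap = curved_line(x) - straight_line(x)
    print(f"x = {x}: gap on left {left_gap:.4f}, gap on right {right_gap:.4f}")
    assert math.isclose(left_gap, right_gap)
```

A single coefficient C controls the bend on both sides, so the model has no way to let one side curve and the other stay straight.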

Conceptually, the left and right sides of this graph show quite different phenomena. The right side says that the decrease in infidelity with higher relative income flattens out in men, but not in women. The left side says that the increase with lower relative income accelerates in men. The model forces these to be the same, and uses the same data to estimate both.

Since there are more men with positive relative income (earning more than their wives) than with negative relative income, most of the actual evidence for men is on the right-hand side, but the newspaper story is looking at the left-hand side.
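A small simulation shows how this can play out. The sketch assumes a made-up, noise-free truth in which the relationship is a straight line for negative relative income and flattens out for positive relative income, with most of the hypothetical data on the right. It fits a quadratic with numpy's `polyfit` as a stand-in for whatever curved term the paper actually used. The curvature learned from the flattening on the right gets applied to the left as well, where it appears as an acceleration that isn't in the truth.

```python
import numpy as np


def true_curve(x):
    """Hypothetical truth: straight for x <= 0, flattening out for x > 0."""
    return -x + 0.5 * np.maximum(x, 0.0) ** 2


# Most couples have positive relative income, so this hypothetical sample
# has 21 points on the right and only two on the left.
x = np.concatenate([[-0.2, -0.1], np.linspace(0.0, 1.0, 21)])
y = true_curve(x)

# Fit a quadratic: one curvature coefficient shared by both sides.
c, b, a = np.polyfit(x, y, deg=2)  # coefficients come highest power first
fitted = np.poly1d([c, b, a])

print(f"fitted x^2 coefficient: {c:.3f}")
print(f"at x = -1: truth {true_curve(-1.0):.3f}, fitted {fitted(-1.0):.3f}")
```

The two left-hand points barely influence the fit, so the fitted value at x = −1 is essentially an extrapolation of the right-hand curvature.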
