They say the neon lights are bright

The Herald (on Twitter, today)

An international study has revealed why Auckland has been ranked low for liveability compared to other cities in New Zealand and the rest of the world.

Stuff (March last year)

The City of Sails continues to be ranked the world’s third most liveable city for quality of life.

Phil Goff, as Mayor of Auckland, obviously prefers the pro-Auckland survey and the Herald said he ‘rubbished’ the other survey, saying it “defies all the evidence which shows Auckland is a growing, highly popular city for all people.”

There’s no need for one survey to be wrong and the other one right, though. It depends on what you’re looking for. Mercer’s rankings (where Auckland does well) are aimed at companies moving employees to overseas postings. One of their intended uses is to work out how much extra executives need to be paid in compensation for living in, say, Houston or Birmingham rather than Auckland or Vancouver. Cost of living doesn’t factor into this; it’s quality of life if you’re rich enough.

Some of the quality of life features (good climate, safe streets, lack of air pollution) can be enjoyed by most people, but some really are more relevant if you’ve got money.

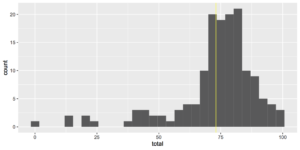

The Movinga ranking that the Herald quotes is about suitability for families. Two important components are housing costs and total cost of living, both measured relative to local incomes. Auckland does fairly badly there, and also on a public transport/road congestion component. The ranking of 94 out of 150 overstates the issue a bit: here’s the distribution of scores, with Auckland in yellow. Auckland is part of the main clump, where the rankings will be sensitive to exactly how each component is weighted.
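To see why a city in the main clump can move a long way with a change in weights, here is a minimal sketch using entirely synthetic data. The 150 random cities, the three unnamed components, the weights, and the city called "midtown" are all made up for illustration; none of this is Movinga's data or method.

```python
import random

def composite_rank(scores, weights, city):
    """Rank (1 = best) of `city` by the weighted sum of its component scores."""
    totals = {
        name: sum(w * s for w, s in zip(weights, comps))
        for name, comps in scores.items()
    }
    ordered = sorted(totals, key=totals.get, reverse=True)
    return ordered.index(city) + 1

# Synthetic data: 150 invented cities, each with three invented component scores.
rng = random.Random(0)
scores = {f"city_{i}": [rng.gauss(5, 1) for _ in range(3)] for i in range(150)}

# A hypothetical city that is good on the first component and poor on the second.
scores["midtown"] = [6.0, 4.0, 5.0]

rank_equal = composite_rank(scores, [1 / 3, 1 / 3, 1 / 3], "midtown")
rank_first = composite_rank(scores, [0.6, 0.2, 0.2], "midtown")
rank_second = composite_rank(scores, [0.2, 0.6, 0.2], "midtown")

print("equal weights:       ", rank_equal)
print("first component x3:  ", rank_first)
print("second component x3: ", rank_second)
```

The same city, with the same component scores, lands in quite different places depending on which component gets the most weight, because many cities have similar totals and small shifts reorder them.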

There are important reasons to want to live in a particular city that neither ranking considers. An obvious one is employment or business opportunities. If your speciality is teaching statistics or installing HVAC in tall buildings or running a Shanxi restaurant, you’ll probably do better in Auckland than in New Plymouth. Movinga also rates cities on suitability for entrepreneurs, jobseekers, and as places to find love (Auckland is 34th out of 100 on that last one).
