Unofficially over arithmetic

From the Herald (reprinted from the Washington Post), under the headline “Teens are officially over Facebook” (yes, officially):

> Now, a pretty dramatic new report out from Piper Jaffray – an investment bank with a sizable research arm – rules that the kids are over Facebook once and for all, having fled Mark Zuckerberg’s parent-flooded shores for the more forgiving embraces of Twitter and Instagram.

This is based on a Piper Jaffray survey of 7200 people aged 13–19 (in the US, though the Herald doesn’t say so).

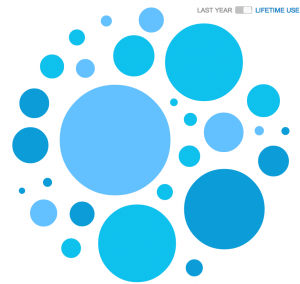

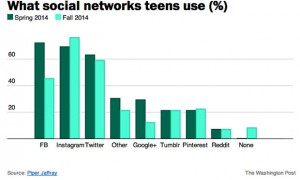

It looks as though US teens are leaving Facebook, but they sure aren’t flocking to Twitter, or, really, to Instagram. A story that gives the actual numbers shows that reported Facebook use has fallen by 27 percentage points, while Instagram has risen by only 7 points and Twitter has fallen by 4.
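Tallying the reported changes makes the gap concrete. This is a minimal sketch using only the three percentage-point changes quoted above. Since teens could presumably pick more than one site, these are changes in the share using each site, not counts of people moving from one site to another.

```python
# Percentage-point changes between the two survey waves, as reported.
changes_pp = {"Facebook": -27, "Instagram": 7, "Twitter": -4}

# Points lost by the sites whose reported use fell.
losses = -sum(v for v in changes_pp.values() if v < 0)
# Points gained by the sites whose reported use rose (only Instagram here).
gains = sum(v for v in changes_pp.values() if v > 0)
# Net change across the three sites combined.
net = sum(changes_pp.values())

print(f"points lost: {losses}, points gained: {gains}, net: {net}")
```

The sites that fell lost 31 points between them, Instagram picked up 7, and the net change across the three is a fall of 24 points. That is the shortfall the question below is about.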

So, where are they going? They aren’t giving up on social media entirely: the “None” category wasn’t offered the first time around, but it’s only 8 percent in the second survey. It’s possible that teens are cutting down on the number of social networks they use, but it seems more likely that the question was badly designed. Even I can think of one major site that isn’t on the list: Snapchat, which GlobalWebIndex estimates is used by 42% of internet-connected US 16–19-year-olds.

Incidentally: those little blue letters that look like they should be a link? They aren’t a link on the Herald site either, and on the Washington Post site they lead to a message that basically says “no, not for you.”