I complain a lot about the publicity-based surveys of varying quality that make it into the NZ media, but there’s a lot more that gets filtered out.

A journalist (whom I’m not sure I should name) sent me an example from Mitre 10:

The research surveyed more than 1,500 New Zealanders on their connection to the quarter-acre dream and asked their opinions on the size of back yards and what they were doing to make the most of them.

An overwhelming 84 per cent of respondents agreed that they liked the idea of the traditional Kiwi quarter-acre paradise – a large plot of land with a standalone house on it, with plenty of room outdoors, and almost all said they would rather live on the traditional quarter-acre section than in high-density housing with reduced outdoor living spaces.

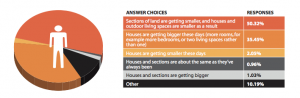

Over half of respondents felt that their outdoor living space is smaller now than what they had growing up (53%). Fifty percent of respondents attributed this to sections of land getting smaller, while 35 per cent believe houses are getting bigger, so there’s less room on a section for an outdoor living space.

The press release is a well-crafted example, with supporting evidence from QV that house sizes are increasing and quotes from a Massey University researcher — not about the survey, but about the general topic.

The survey, on the other hand, was fairly bogus. It was online, and most of the respondents got there through the Mitre 10 Facebook page. You’d expect (and the Mitre 10 CEO has said) that the Facebook page attracts Mitre 10 customers, not a representative sample of New Zealanders. The report confirms this: 88% of respondents were born in NZ, compared with about 75% of the population as a whole.

To make matters worse, here’s the reported data for the paragraphs quoted above. “Houses are bigger” and “sections are smaller” were alternative responses to the same question. You couldn’t answer that both were true, even though that is the correct answer and the position the report itself is pushing.

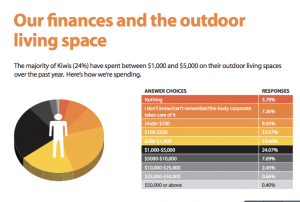

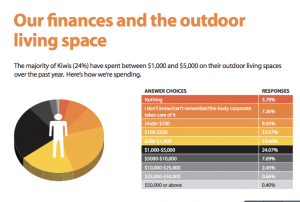

One more finding I can’t resist quoting: “The majority of Kiwis (24%) have spent between $1,000 and $5,000 on their outdoor living spaces over the past year.”