Who’s afraid of the NSA?

Two tweets in my timeline this morning linked to this report about this research paper, saying “americans have stopped searching on forbidden words”.

That’s a wild exaggeration, but what the research found was interesting. They looked at Google Trends search data for words and phrases that might be privacy-related in various ways: for example, searches that might be of interest to the US government security apparat or searches that might be embarrassing if a friend knew about them.

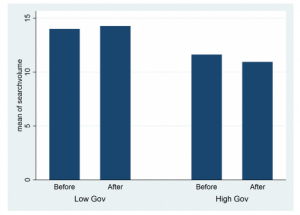

In the US (but not in other countries) there was a small but definite change in searches at around the time of Edward Snowden’s NSA revelations. Search volume in general kept increasing, but searches on words that might be of interest to the government decreased slightly.

The data suggest that some people in the US became concerned that the NSA might care about them. Given that there presumably aren’t enough terrorists in the US to explain the difference, they also suggest that knowing about the NSA surveillance is having an effect on the political behaviour of (a subset of) ordinary Americans.

There is a complication, though. A similar fall was seen in the other categories of privacy-sensitive data, so either the real answer is something different, or people are worried about the NSA seeing their searches for porn.