The light at the end of the tunnel

It’s the end of another semester, and we’re about to have a couple of days of presentations by our BSc(Hons) and MSc students, telling us what they’ve been doing all year:

Improving staffing schedules at a Cardiothoracic Intensive Care Unit

Clickers: A study of student opinion on audience response technology

Population modelling interactions between introduced and threatened species for conservation management

Deal or No Deal

From question to design: Creating a guide for experimental planning and design in the biological sciences

Balanced Incomplete Block Design in Multivariate Analysis

Use of multivariate omnibus test with mixed model analysis on heterogeneous nested data

Generalised Estimating Equations (GEEs) in the multivariate omnibus test

Web-based interactive graphics

Interactive Graphics for Data Quality Assessment

Creating an R meta-analysis graphics package

Monte Carlo Methods for Adjusting Confidence Intervals for Parameter of Point Process Models

Investigating if follow-up at outpatient clinics helps prevent adverse patient outcomes from Bowel Resection and Hip Replacement

Methods of analysing hospital length of stay

Data management for combining data sets and macro simulation

Bootstrap methods in linear regression

Comparison of volatility estimates in Black-Scholes option pricing

Financial planning for retirees

A diagnostic for the Gaussian copula

Model Selection under Complex Sampling

BART vs Logistic regression: Propensity score estimation

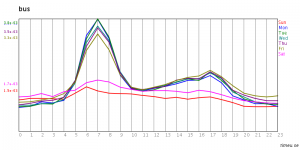

Modelling and Prediction of Electricity Consumption

Brand attribute importance using choice elimination

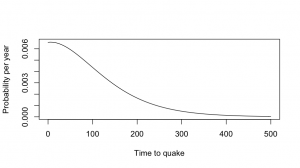

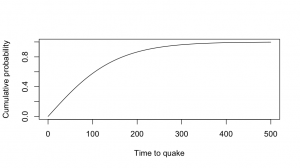

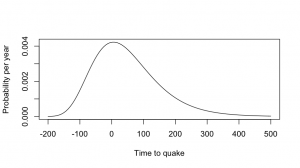

This is a simple and reasonable model for time intervals, and it also has the virtue of giving the same answers that the researchers gave to the press. Using the estimates of mean and variation in the paper, the distribution of times to the next big quake looks like the first graph. The quake is relatively predictable, but “relatively” in this sense means “give or take a century”.
