Measurement error and rare events

Surveys are not perfect: some people misunderstand the question, some recall incorrectly, some responses are recorded incorrectly by the interviewer, and some people just lie. These errors happen in both directions, but their impact is not symmetrical.

Suppose you had a survey that asked “Have you ever been abducted by aliens?” Since the true answer is ‘No’ for essentially everyone, false ‘Yes’ results will be far more common than false ‘No’ results, so the survey will necessarily overestimate the true proportion. If you wrote down the wrong answer for 1% of people, you’d end up with an estimate that was about one percentage point too high.

In principle, the same issue could be a serious problem in estimating the support for minor parties: about 1% of people voted for ACT at the last election, and 99% didn’t. Suppose you poll 10,000 people and ask them if they voted for ACT, and suppose that 100 of them really were ACT voters. If your opinion poll gets the wrong answer, randomly, for 1% of people, you will get the wrong answer from 1 of the true ACT voters and 99 of the true non-ACT voters, so you will report 100+99-1 = 198 ACT voters and 9900+1-99 = 9802 non-ACT voters. You would overestimate the votes for ACT by almost a factor of two! Keith Humphreys, who we have linked to before, postulates that this is why US polls tend to seriously overestimate support for third-party candidates.
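The arithmetic above can be checked with a short sketch. It assumes, as the example does, that each recorded answer is flipped independently with the same probability regardless of the true answer; the function name `reported_counts` and its parameters are made up for illustration.

```python
def reported_counts(n_polled, n_true_yes, error_rate):
    """Expected reported (yes, no) counts when every answer is
    flipped with probability error_rate (an assumed, symmetric error)."""
    n_true_no = n_polled - n_true_yes
    false_no = n_true_yes * error_rate   # true 'yes' recorded as 'no'
    false_yes = n_true_no * error_rate   # true 'no' recorded as 'yes'
    reported_yes = n_true_yes - false_no + false_yes
    reported_no = n_true_no - false_yes + false_no
    return reported_yes, reported_no


# The ACT example: 10,000 polled, 100 true ACT voters, 1% error.
yes, no = reported_counts(10_000, 100, 0.01)
print(round(yes), round(no))  # 198 9802
```

The same error rate moves 99 people into the small group and only 1 out of it, which is why the rarer answer gets inflated.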

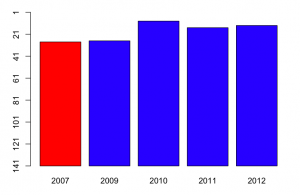

I’m skeptical. Here in NZ, where we really have minor parties, there is no systematic tendency to overestimate the support they receive. ACT got 1% of the vote, and that was close to what the polls predicted. Similarly, the Maori Party and the Greens received about the same number of votes in the last election as averages of the polls had predicted. For NZ First, the election vote was actually higher than in the opinion polls. Similarly, for the Dutch general election in 2010 there was pretty good agreement between the last polls and the election results. Even in Australia, where there is effectively a two-party system in the lower house (but with preferential voting), the opinion poll figures for the Greens agreed pretty well with the actual vote.

It’s true that measurement error tends to bias estimates towards 50%, and this matters in some surveys, but I would have guessed the US phantom third-party support is the result of bias in what people say, not error in recording it. That is, I suspect people tend to overstate their support for third-party candidates in advance of the election, and that in the actual election they vote strategically for whichever of the major parties they dislike least. My hypothesis would imply not much bias in countries where minor-party votes matter, and more bias in countries with first-past-the-post voting. Unfortunately there’s also a pure measurement error hypothesis that’s consistent with the data, which is that people are just more careful about measuring minor-party votes in countries where they matter.
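To see why random measurement error pulls estimates towards 50%, here is a minimal sketch under the same assumption of a symmetric flip probability. The function name `expected_reported_share` is hypothetical; the expected reported share works out to the true share plus error_rate × (1 − 2 × true share).

```python
def expected_reported_share(true_share, error_rate):
    """Expected reported share when each answer is flipped with
    probability error_rate, whatever the true answer (an assumption)."""
    kept = true_share * (1 - error_rate)      # true supporters recorded correctly
    gained = (1 - true_share) * error_rate    # non-supporters recorded as supporters
    return kept + gained


# Compare a range of true shares at a 1% error rate.
for true_share in (0.01, 0.10, 0.50, 0.90):
    reported = expected_reported_share(true_share, 0.01)
    print(f"true {true_share:.0%} -> reported {reported:.2%}")
```

With a 1% error rate, a true 1% becomes about 1.98%, a true 10% becomes about 10.8%, 50% is unchanged, and 90% drops to about 89.2%. The absolute shift is never more than one percentage point, but it is a large relative change only for shares near 0% or 100%.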