Prompted by a comment from Cosma Shalizi (who, irritatingly, is right as usual), I tried some simulated data on the great ape midlife crisis, and I’m now even less impressed with the paper.

There’s very strong evidence in the paper that the youngest apes are rated as happier by their handlers, and that the relationship with age is not linear. What’s less clear is whether there is a U-shape.

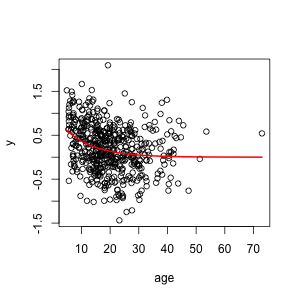

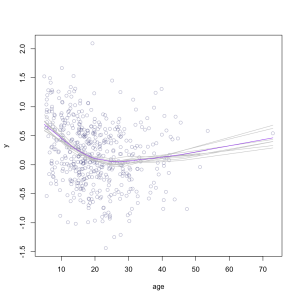

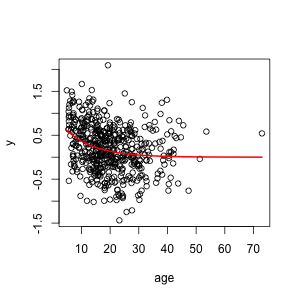

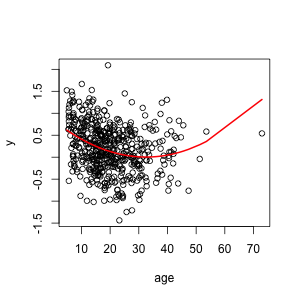

I simulated data where the score decreased sharply at young ages and then flattened out, but didn’t go up again in old age, and analysed as the researchers did in the paper. This is what the data and true relationship look like:

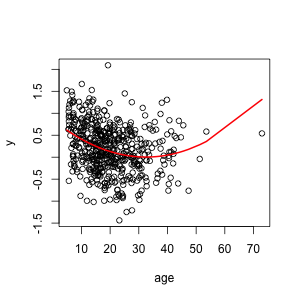

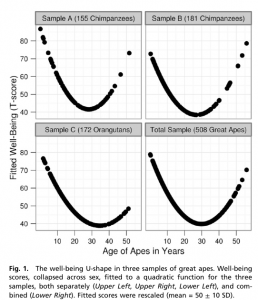
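For anyone who wants to try something similar, here is a minimal sketch of this kind of simulation in Python. The exponential form of the curve, the age range of 0 to 50, the noise level, and the sample size are all illustrative assumptions, not the values behind the plots above.

```python
import math
import random

def true_score(age):
    """Hypothetical 'no midlife crisis' curve: drops sharply in youth, then stays flat."""
    return 50.0 + 10.0 * math.exp(-age / 4.0)

def simulate(n=500, max_age=50.0, noise_sd=10.0, seed=1):
    """Draw ages uniformly and add Gaussian noise to the true curve (all assumed values)."""
    rng = random.Random(seed)
    ages = [rng.uniform(0.0, max_age) for _ in range(n)]
    scores = [true_score(a) + rng.gauss(0.0, noise_sd) for a in ages]
    return ages, scores

ages, scores = simulate()
print(len(ages), round(true_score(0), 2), round(true_score(25), 2), round(true_score(50), 2))
```

The important feature is that `true_score` never turns upward: any rise in old age that a fitted model reports has to come from the model, not from the data-generating process.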

Fitting the model used in the research paper gives a U-shape, because the model they fitted can only give U-shapes. As in the paper, the minimum is in middle life. The statistical significance for the non-linear term is better than in the paper.

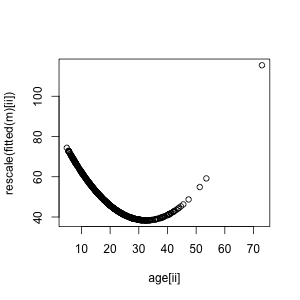

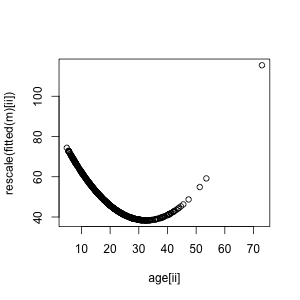
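To see why this happens, it helps to take the noise away entirely. The sketch below assumes the fitted model is a quadratic in age (linear plus squared term) and fits it by ordinary least squares to a hypothetical curve that falls and then flattens; the curve, the age grid, and the helper names are my own illustrative choices. A quadratic has no way to represent "falls, then stays flat", so it bends back up.

```python
import math

def true_score(age):
    """Hypothetical curve with no upturn: steep early decline, then flat."""
    return 50.0 + 10.0 * math.exp(-age / 4.0)

def solve3(A, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(3):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][3] / M[i][i] for i in range(3)]

def fit_quadratic(x, y):
    """Least-squares fit of y = b0 + b1*x + b2*x**2 via the normal equations."""
    powers = [sum(xi ** k for xi in x) for k in range(5)]
    A = [[powers[i + j] for j in range(3)] for i in range(3)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(3)]
    return solve3(A, b)

# Noise-free data on an even grid of ages from 0 to 50 (assumed range).
ages = [0.5 * i for i in range(101)]
scores = [true_score(a) for a in ages]

b0, b1, b2 = fit_quadratic(ages, scores)
turning_point = -b1 / (2.0 * b2)  # age at which the fitted parabola is lowest
print(b2 > 0, round(turning_point, 1))
```

A positive squared-age coefficient with a turning point inside the age range is exactly what gets read as a midlife dip, even though the curve that generated the data never rises.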

In the paper, the fitted U-shape was rescaled to have mean 50 and standard deviation 10, and the raw data weren’t displayed, making the relationship look much stronger:

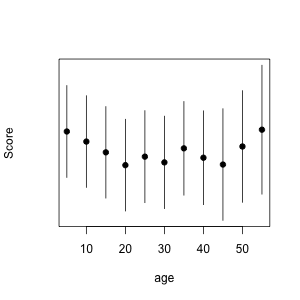

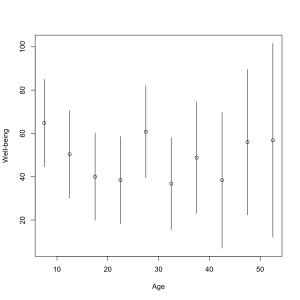

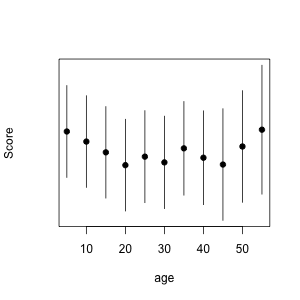
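The effect of that rescaling is easy to demonstrate. In the sketch below, two made-up fitted curves differ by a factor of 100 in depth, yet look identical once each is standardised to mean 50 and standard deviation 10. The curves are hypothetical, and I am assuming the population standard deviation is used; the paper's exact convention may differ.

```python
import statistics

def rescale(values, mean=50.0, sd=10.0):
    """Standardise values to the given mean and SD (population SD is an assumption)."""
    centre = statistics.fmean(values)
    spread = statistics.pstdev(values)
    return [mean + sd * (v - centre) / spread for v in values]

ages = list(range(0, 51))
shallow = [0.001 * (a - 30) ** 2 for a in ages]  # barely-there dip
deep = [0.1 * (a - 30) ** 2 for a in ages]       # dip 100 times deeper

shallow_t = rescale(shallow)
deep_t = rescale(deep)

# Raw ranges differ by a factor of 100; rescaled ranges are the same.
print(max(shallow) - min(shallow), max(deep) - min(deep))
print(max(shallow_t) - min(shallow_t), max(deep_t) - min(deep_t))
```

Once the curve itself is standardised, the vertical axis carries no information about how big the effect is relative to the scatter in the raw data, which is why leaving the raw points off the plot matters.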

And, as in the paper, the banded model in the Appendix is at least consistent with a U-shape.

So, you can get the results in the paper with no midlife crisis at all. Now, you don’t necessarily get results like these: if you run the code with different sets of random numbers you get results this good maybe half the time. And of course, you could also get those results with a true U-shape.

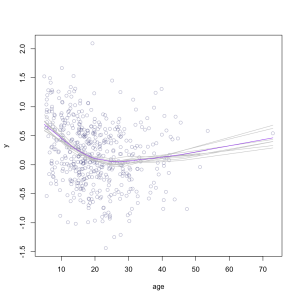

The point is that the results in the paper are not strong evidence for a U-shape, and the graphs and tables in the paper give the impression of much stronger evidence than they actually contain. A much better graph would use a scatterplot smoother, to draw a curve through the data objectively, and something like bootstrap replicates of the curve to give a real impression of uncertainty. This doesn’t give a formal test, but at least it shows what the data are saying.
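A rough sketch of that kind of graph's ingredients, in plain Python. I am using a Nadaraya-Watson kernel smoother with a Gaussian kernel as a stand-in for whatever scatterplot smoother you prefer (lowess or a spline would do as well), and the simulated data, bandwidth, and number of bootstrap replicates are all illustrative assumptions.

```python
import math
import random

def kernel_smooth(x, y, grid, bandwidth=4.0):
    """Nadaraya-Watson smoother: Gaussian-weighted local average of y at each grid point."""
    curve = []
    for g in grid:
        w = [math.exp(-0.5 * ((xi - g) / bandwidth) ** 2) for xi in x]
        curve.append(sum(wi * yi for wi, yi in zip(w, y)) / sum(w))
    return curve

def bootstrap_curves(x, y, grid, n_boot=100, seed=0):
    """Resample (x, y) pairs with replacement and re-smooth each resample."""
    rng = random.Random(seed)
    n = len(x)
    curves = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        curves.append(kernel_smooth([x[i] for i in idx], [y[i] for i in idx], grid))
    return curves

def pointwise_band(curves, lower=0.025, upper=0.975):
    """Rough pointwise percentile band across the bootstrap curves."""
    lo, hi = [], []
    for values in zip(*curves):
        ordered = sorted(values)
        lo.append(ordered[int(lower * len(ordered))])
        hi.append(ordered[int(upper * len(ordered))])
    return lo, hi

# Hypothetical data: steep early decline, then flat, plus noise.
rng = random.Random(1)
ages = [rng.uniform(0.0, 50.0) for _ in range(300)]
scores = [50.0 + 10.0 * math.exp(-a / 4.0) + rng.gauss(0.0, 10.0) for a in ages]

grid = [2.0 * i for i in range(26)]  # ages 0, 2, ..., 50
fitted = kernel_smooth(ages, scores, grid)
curves = bootstrap_curves(ages, scores, grid)
band_lo, band_hi = pointwise_band(curves)
```

Plotting the raw points, `fitted`, and either the individual `curves` or the band gives the picture described above. Kernel smoothers of this simple kind are biased near the ends of the age range, so a local-linear smoother would be a better choice for a real analysis.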

It would take some thought to come up with a good formal test, but a graph like this one should be a minimum threshold. If there really is evidence of a midlife crisis in apes this graph would show it, and if there isn’t, it wouldn’t.