August 16, 2021

Briefly

- Siouxsie Wiles in Stuff today with a piece about different study designs in medical research and the hierarchy of evidence. Nice to see this from a lab scientist!

- Censuses: the data releases from the 2020 US Census showing changes in population and in race/ethnic composition: Washington Post, NY Times, LA Times

- Censuses: Australia is holding its census at the moment, which is not going to be good for data on commuting, but will be good for data on the pandemic

- Censuses: Australia has a very good statistical standard for sex and gender questions, but is strangely not using it for the census.

- It appears that machine learning systems can distinguish self-reported racial identity from x-ray images. People can’t, and it’s weird that it’s even possible. Blog post, Twitter thread, research preprint. I’m not sure I believe this, but the research seems to have been fairly careful.

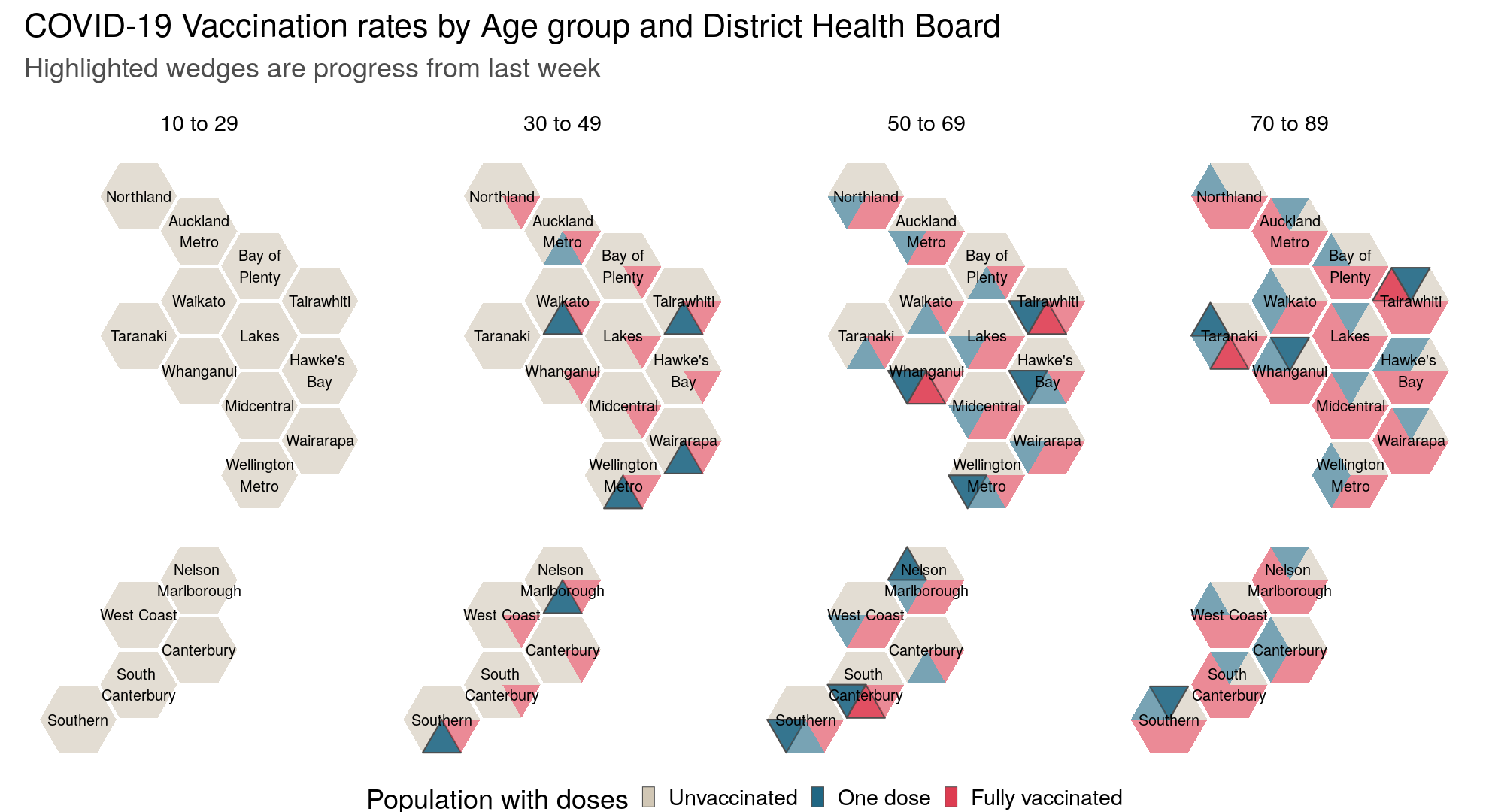

- Jonathan Marshall is doing graphs of vaccination in NZ. These show the data rounded to the nearest sixth of the population — having all the wedges filled means at least 92%ish vaccinated.
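
The "92%ish" figure comes from the rounding: with six wedges, a share rounds to six-sixths once it reaches 11/12, or about 91.7%. A minimal Python sketch of that arithmetic follows. The function names are hypothetical, and rounding exact halves up is an assumption, since the post does not say how the graphs handle ties.

```python
from fractions import Fraction
from math import floor


def wedges_filled(share, wedges=6):
    """Wedges filled when `share` (0 to 1) is rounded to the nearest 1/wedges.

    Assumption: exact halves round up; the original graphs may differ.
    """
    return floor(share * wedges + Fraction(1, 2))


def full_threshold(wedges=6):
    """Smallest share that rounds to all wedges filled."""
    return Fraction(2 * wedges - 1, 2 * wedges)


print(full_threshold())         # 11/12
print(float(full_threshold()))  # roughly 0.9167
print(wedges_filled(0.91))      # 5
print(wedges_filled(0.92))      # 6
```

So a region at 91% vaccinated would still show five wedges, while one at 92% would show all six.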

Thomas Lumley (@tslumley) is Professor of Biostatistics at the University of Auckland. His research interests include semiparametric models, survey sampling, statistical computing, foundations of statistics, and whatever methodological problems his medical collaborators come up with. He also blogs at Biased and Inefficient.

On the x-ray AI bit: any chance that the machines used at different centres provide different imaging, and that if there’s some self-identified racial sorting in who goes to which centres, the AI could be picking up on the type of image? Like “Well, this looks like a picture from an Acme X-1 unit, and previous images from an Acme X-1 have all been from India…”

4 years ago

No, the accuracy assessment used data from different centres than the model training did, with a white/black/other mix in each dataset. They also looked at whether any particular part of the image was more important (which would pick up at least some machine artifacts) and didn’t see anything helpful.

4 years ago

I wonder if it’s colour that the AI is picking up? Plots with no features are able to get ethnicity right. The only information left in the plot appears to be colour. Differences in calcium intake might be producing colour differences – Asians are more likely to be lactose intolerant than people of European ancestry. That may lead to lower calcium density in their bones and mean their bones look more speckled on x-ray.

4 years ago