From Radio NZ (and ODT):

Analysis by the consultancy firm Dot Loves Data shows that over a five-year period the rate of assaults in Wellington was 10 times higher than the national average.

It considered all reported crimes over a five-year period, and found in the capital there were 2056 counts of assault and 176 counts of sexual assault reported.

It’s pretty clear that Wellington is not going to have a rate of assaults 10 times higher than the national average in any very useful sense. Unfortunately, I don’t have the report that Dot Loves Data are said to have published, and it’s not clear from the story exactly what comparisons they did, so I’ll have to do this the hard way. The advantage is that you can see what I did, and also see some of the limitations in the data.

Crime data can be found on policedata.nz. I looked at ‘Victimisations by Time and Place: Trends’, i.e., people who reported getting crimed, regardless of whether the offender was caught and prosecuted, for the five years ending 2020-08-31 (since that’s the end of the data) and where the place of the assault was reported. Having the place reported is a surprisingly strong restriction: in the last 12 months about 60% of the assaults aren’t assigned to a location* for confidentiality reasons, because they occurred in dwellings. The lack of location data is actually a problem when the point of the story is to compare locations, but let’s pass over it for now and just note that we’re comparing assaults occurring in public rather than all assaults.

For the category “Acts intended to cause injury” there were 91472 in New Zealand, 4337 in Wellington City, and 10303 in Wellington Region. The 91472 acts intended to cause injury that happened in public were about 50,000 ‘common assault’, 17,000 ‘serious assault resulting in injury’ and 23,000 ‘serious assault not resulting in injury’. These aren’t the names of NZ crimes, because (a) they are standardised official-statistics categories and (b) if no-one is arrested or prosecuted it’s a bit hard to be precise about what crime a court would find had occurred. The ‘intended to cause injury’ categories don’t include sexual assault, which is in a separate group and is left as an exercise for the reader. My figures for assault don’t exactly match the reported ones (4337 for Wellington City versus 2056 for ‘the capital’), but they probably don’t cover exactly the same time frame and might differ in other ways.

The population of New Zealand is about 4.9 million, of the Wellington Region is about 500,000, and of Wellington City is about 200,000. Wellington City has about a 9% higher rate per capita of assault victimisation than the country as a whole. Not ten times, 1.09 times. Wellington Region is about 4% higher than the country as a whole.
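A minimal Python sketch of the per-capita comparison, using only the counts and the rounded populations quoted above. With those rounded denominators the ratios come out at about 1.10 for the region and 1.16 for the city, a little above the 4% and 9% in the text; those figures presumably rest on more precise population estimates that aren't given here. Either way the answer is "slightly higher", nowhere near ten times.

```python
# Public-place 'Acts intended to cause injury' victimisations,
# five years to 2020-08-31, as quoted in the post.
counts = {
    "New Zealand": 91472,
    "Wellington Region": 10303,
    "Wellington City": 4337,
}

# Rounded populations as quoted in the post. Assumption: these are only
# approximate, so the ratios differ slightly from the 1.04 / 1.09 in the text.
populations = {
    "New Zealand": 4_900_000,
    "Wellington Region": 500_000,
    "Wellington City": 200_000,
}


def rate_per_1000(count: int, population: int) -> float:
    """Victimisations per 1000 residents over the whole five-year window."""
    return 1000 * count / population


def rate_ratio(area: str, baseline: str = "New Zealand") -> float:
    """Per-capita rate in `area` divided by the per-capita rate in `baseline`."""
    return (rate_per_1000(counts[area], populations[area])
            / rate_per_1000(counts[baseline], populations[baseline]))


for area in ("Wellington Region", "Wellington City"):
    print(f"{area}: {rate_ratio(area):.2f} times the national rate")
```

Swapping in more precise population figures only changes the `populations` dictionary; the comparison itself is just a ratio of two rates.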

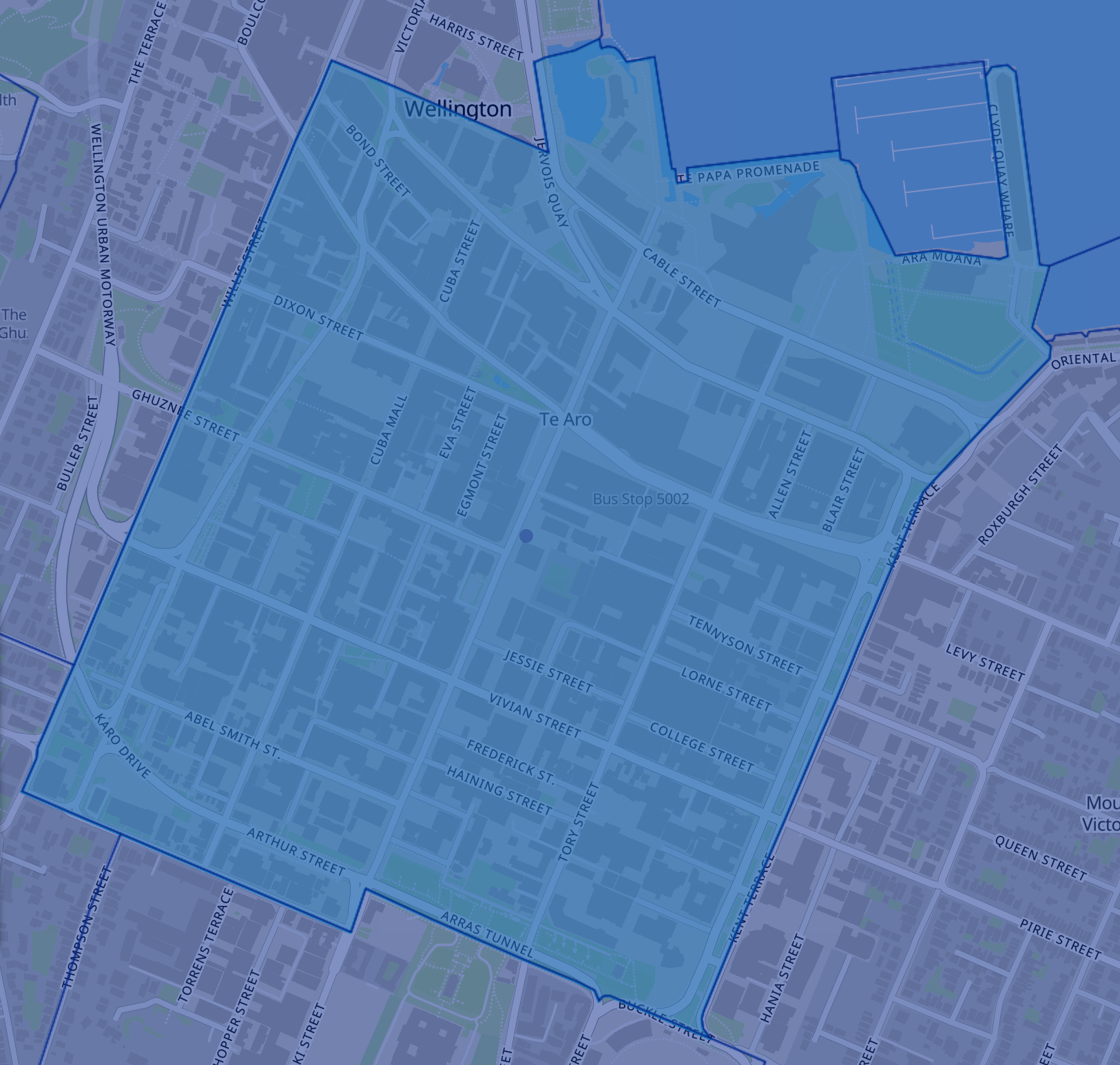

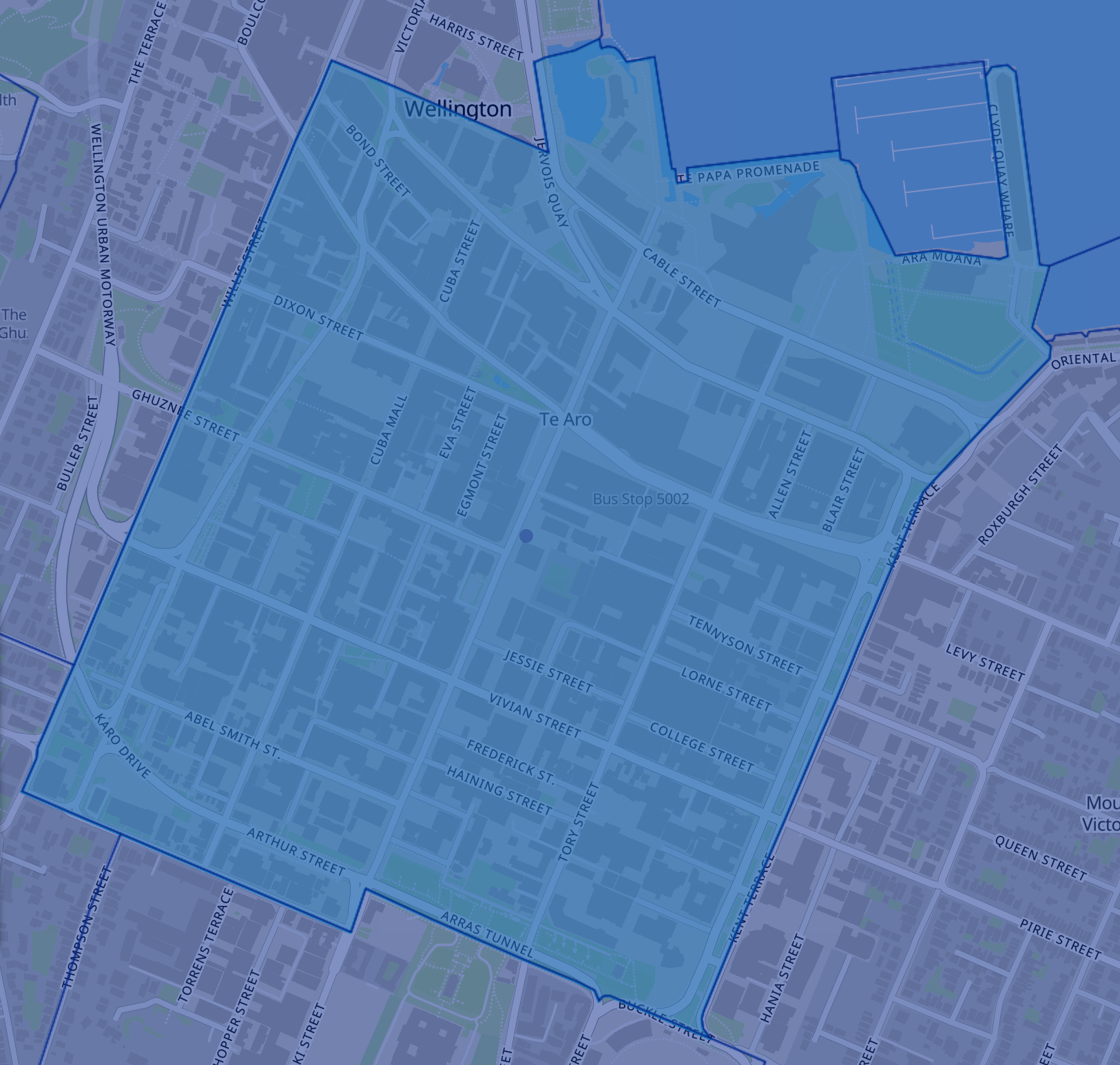

Now, since the story was focused on Courtenay Place and Cuba St, maybe the ‘ten times’ claim was supposed to apply there rather than to ‘the capital’ as a whole, and we should narrow down the geographic focus. The Census Area Unit covering Courtenay Place and Cuba St is “573101 Willis St – Cambridge Terrace”.

In that area, there were 1858 ‘acts intended to cause injury’ over five years that happened in public, in an area with a population of 9230. On a per capita population basis that’s 10.8 times the rate for NZ as a whole, suggesting something like this is the analysis being reported.

But why are we dividing by the resident population of Te Aro? Most of the people out partying there don’t live there; they live all over the city and the Wellington region, but they, not the residents, are the relevant denominator. In fact, a lot of assaults on residents will not be in the statistics, because they will happen in someone’s home. At the other extreme, you could leave out population entirely and compare the 1858 assaults in the area unit to the average of 45 for census area units all over NZ: a factor of 40. That also doesn’t answer any really useful question.
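The same arithmetic for the Willis St – Cambridge Terrace area unit shows how much the answer depends on the choice of denominator. This sketch uses only figures quoted in the post, with the national population rounded to 4.9 million; the variable names are illustrative.

```python
NZ_COUNT = 91472           # public-place assaults, all of New Zealand
NZ_POPULATION = 4_900_000  # rounded, as quoted in the post

AREA_COUNT = 1858          # Willis St - Cambridge Terrace area unit
AREA_RESIDENTS = 9230      # resident population of that area unit
AVG_PER_AREA_UNIT = 45     # average count per census area unit, as quoted

# Denominator 1: resident population (the comparison that gives "ten times").
per_resident_ratio = (AREA_COUNT / AREA_RESIDENTS) / (NZ_COUNT / NZ_POPULATION)

# Denominator 2: none at all, just this area unit's count vs the average one.
per_area_unit_ratio = AREA_COUNT / AVG_PER_AREA_UNIT

print(f"per resident: {per_resident_ratio:.1f}x")    # per resident: 10.8x
print(f"per area unit: {per_area_unit_ratio:.0f}x")  # per area unit: 41x
```

Neither denominator measures the people actually exposed to risk on a night out, which is why the two numbers can differ by a factor of four without either being "right".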

There seem to be two implied statistical questions that are more relevant to the news stories:

- Is going out to Te Aro substantially more dangerous than going to bars and nightclubs elsewhere in the country, on an individual party-goer basis?

- Would cracking down on the Courtenay Place/Cuba precinct reduce assaults?

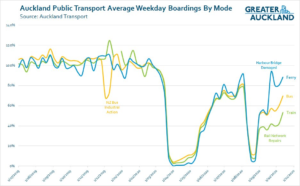

Neither question can be answered with administrative data. For the first question you’d need data on the number of visitors to the area on, say, Friday and Saturday nights and to other areas in the Wellington region and around the country. For Wellington City it might be feasible to estimate this from parking, rideshare, and public transport usage, or from cellphone densities, but it would be harder to get data for smaller centres.

The second question depends on the alternative. It’s pretty clear that if we banned going out to bars and nightclubs the reported assault rate would fall: we tried that, in April/May, and had about 25% fewer cases nationwide than in 2019 or 2018, and about 50% fewer in the Courtenay Place/Cuba area. It’s also pretty clear we’re not actually going to tackle assaults with nationwide lockdowns.

If we just cracked down on unlawful behaviour in that area, or reduced the number of places selling alcohol, it’s not clear what would happen. It might be that people drink less and fight less. It might be that they just move the party to some other central area. It might be that they spread out across the city. Or, people might get drunk and fight in the comfort and safety of their own homes and streets — we know that assault and sexual assault in the home are badly under-reported (under-reported to police as well as not being in the public data set).

The right comparison will depend on what individual risk or potential policy change you are trying to evaluate, but it’s not likely to be this one.

* I emailed the NZ Police data address to ask about where the missing assaults were; my request is being actioned pursuant to the Official Information Act**

** Yes, I realise that just answering would count as actioning it pursuant to the Official Information Act, but the email still doesn’t make me expect*** a rapid reply

*** And I need to confess to having completely misjudged the police data people, who got back to me the next morning and were extremely helpful