Trying again

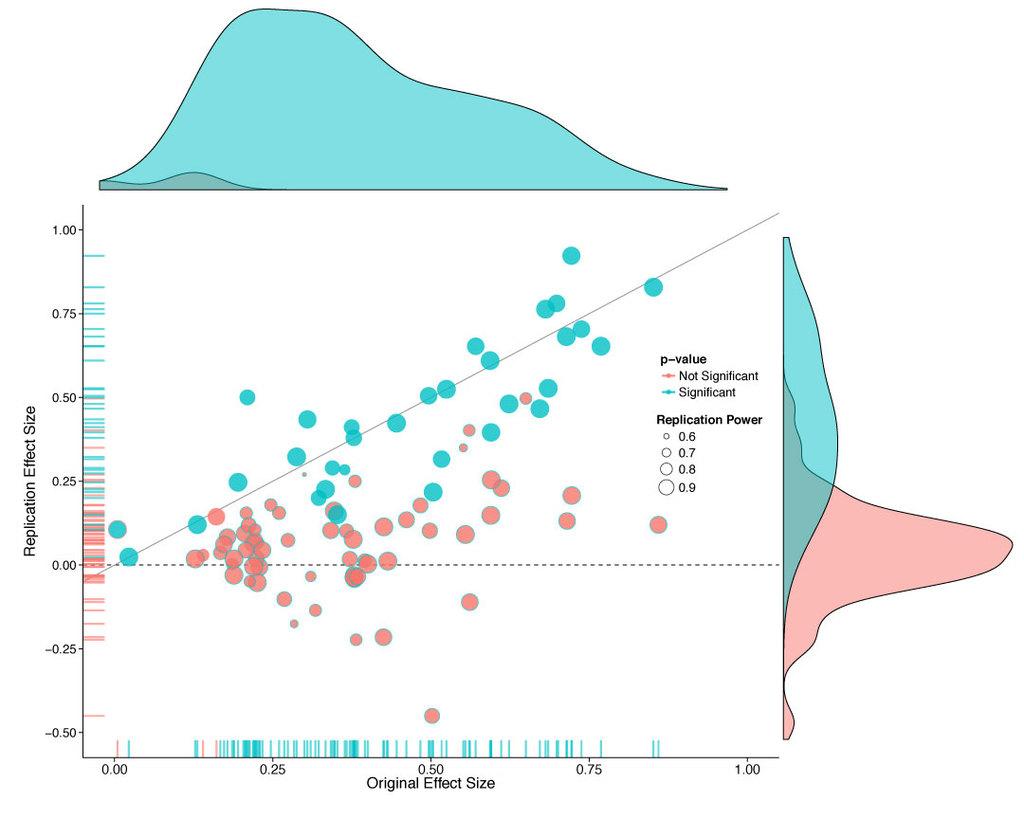

This graph is from the Open Science Framework attempt to replicate 100 interesting results in experimental psychology, led by Brian Nosek and published in Science today.

About a third of the experiments got statistically significant results in the same direction as the originals. Averaging all the experiments together, the effect size was only half that seen originally, but the graph suggests another way to look at it. It seems that about half the replications got basically the same result as the original, up to random variation, and about half the replications found nothing.
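To see how those two descriptions fit together, here is a minimal simulation sketch. It assumes a hypothetical set of 100 replications in which half have a true effect equal to the original and half have a true effect of zero, each measured with some random noise. The effect size (0.4), the noise level (0.1), and the function name are illustrative assumptions, not values from the study. Under those assumptions, the average replicated effect comes out at roughly half the original, even though no individual experiment has a "half-sized" effect.

```python
import random
import statistics

ORIGINAL_EFFECT = 0.4  # hypothetical original effect size, not from the study


def simulate_replications(n_studies=100, original_effect=ORIGINAL_EFFECT,
                          noise_sd=0.1, seed=0):
    """Simulate replication estimates from a 50/50 mixture.

    Even-numbered studies have a true effect equal to the original;
    odd-numbered studies have a true effect of zero. Each estimate
    adds Gaussian noise to stand in for sampling variation.
    """
    rng = random.Random(seed)
    estimates = []
    for i in range(n_studies):
        true_effect = original_effect if i % 2 == 0 else 0.0
        estimates.append(rng.gauss(true_effect, noise_sd))
    return estimates


estimates = simulate_replications()

# Ratio of the average replicated effect to the original effect.
mean_ratio = statistics.mean(estimates) / ORIGINAL_EFFECT
print(f"mean replication effect / original effect = {mean_ratio:.2f}")
```

A plot of these simulated estimates against the original effect would show two clusters, one near the original value and one near zero, rather than a single cloud at half the original.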

Ed Yong has a very good article about the project in The Atlantic. He says it’s worse than psychologists expected (but at least now they know). It’s actually better than I would have expected — I would have guessed that the replicated effects would average quite a bit smaller than the originals.

The same thing is going to be true for a lot of small-scale experiments in other fields.

Thomas Lumley (@tslumley) is Professor of Biostatistics at the University of Auckland. His research interests include semiparametric models, survey sampling, statistical computing, foundations of statistics, and whatever methodological problems his medical collaborators come up with. He also blogs at Biased and Inefficient.