If it seems too good to be true

This one is originally from the Telegraph, but it’s one where you might expect the local editors to exercise a little caution before reposting it:

A test that can predict with 100 per cent accuracy whether someone will develop cancer up to 13 years in the future has been devised by scientists.

It’s very unlikely that the accuracy could be 100%. Even if it was, it’s very unlikely that the scientists could know it was 100% accurate by the time they first published their results.
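To see why the second point holds, suppose a test happened to classify every one of n people in a study correctly. An exact (Clopper-Pearson) confidence interval for the true accuracy still has a lower limit below 100%: with n correct out of n, the lower limit of a two-sided 95% interval is (0.025)^(1/n). The familiar "rule of three", 1 - 3/n, approximates the one-sided version. A minimal sketch in Python; the sample sizes are illustrative and are not taken from the study.

```python
def exact_lower_bound_all_correct(n: int, confidence: float = 0.95) -> float:
    """Lower limit of the two-sided exact (Clopper-Pearson) interval
    for accuracy when all n of n classifications were correct.

    With n successes out of n the upper limit is 1, and the lower limit p
    solves p**n = alpha / 2, so p = (alpha / 2) ** (1 / n).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    alpha = 1.0 - confidence
    return (alpha / 2.0) ** (1.0 / n)


def rule_of_three_lower_bound(n: int) -> float:
    """Approximate one-sided 95% lower bound after n correct out of n."""
    return 1.0 - 3.0 / n


# Illustrative sample sizes only (hypothetical, not from the paper).
for n in (20, 50, 100, 500):
    print(f"n={n:4d}  exact lower bound={exact_lower_bound_all_correct(n):.3f}  "
          f"rule of three={rule_of_three_lower_bound(n):.3f}")
```

Even a perfect record in a few hundred people is compatible with a test that gets a noticeable fraction of future cases wrong.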

One doesn’t need to go as far as the open-access research paper to confirm one’s suspicions. The press release from Northwestern University doesn’t have anything like the 100% claim in it; there are no accuracy claims made at all.

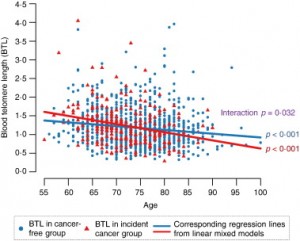

If you do go to the research paper, just looking at the pictures helps. In this graph (figure 1), the red dots are people who ended up with a cancer diagnosis; the blue dots are those who didn’t. There’s a difference between the two groups, but nothing like the complete separation you’d see with 100% accuracy.
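"Complete separation" has a concrete meaning: for a single marker, 100% accuracy requires some cut-off with every cancer case on one side and every non-case on the other. The sketch below finds the best accuracy any single cut-off can achieve. It is a simplification that ignores anything else plotted in the figure, and the marker values are made up for illustration, not read off figure 1.

```python
from typing import Sequence


def best_threshold_accuracy(cases: Sequence[float],
                            controls: Sequence[float]) -> float:
    """Best accuracy of any rule 'predict cancer if value > t', or the
    reversed rule 'predict cancer if value <= t', over all thresholds t."""
    labelled = [(v, True) for v in cases] + [(v, False) for v in controls]
    n = len(labelled)
    values = sorted({v for v, _ in labelled})
    # A cut point below every value, plus each observed value, covers
    # every distinct way a single threshold can split the data.
    candidates = [values[0] - 1.0] + values
    best = 0.0
    for t in candidates:
        correct = sum((v > t) == is_case for v, is_case in labelled)
        # The reversed rule gets exactly the other points right.
        best = max(best, correct / n, (n - correct) / n)
    return best


# Hypothetical marker values.
separated = best_threshold_accuracy([5.0, 6.0, 7.0], [1.0, 2.0, 3.0])
overlapping = best_threshold_accuracy([2.0, 3.5, 4.0, 5.0],
                                      [1.0, 2.5, 3.0, 4.5])
print(separated, overlapping)
```

Only when the two groups do not overlap at all does the best cut-off reach 1.0; as soon as the red and blue dots intermingle, as they do in the paper’s figure, some people are misclassified whatever threshold is chosen.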

Reading the Discussion section, where the researchers tend to be at least somewhat honest about the limitations of their research, we find:

Our study participants were all male and mostly Caucasian, thus studies of females and non-Caucasians are warranted to confirm our findings more broadly. Our sample size limited our ability to analyze specific cancer subtypes other than prostate cancer. Thus, caution should be exercised in interpreting our results as different cancer subtypes have different biological mechanisms, and our low sample size increases the possibility of our findings being due to random chance and/or our measures of association being artificially high.
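The last point, that a small sample can make "measures of association … artificially high", is a general phenomenon sometimes called the winner’s curse: among studies that reach statistical significance, the estimated effects are biased upward, and the bias is worst when samples are small. The simulation below is a general illustration, not a reanalysis of this study; the true effect of 0.2, the known standard deviation of 1 and the significance rule are all assumptions chosen for the sketch.

```python
import random
import statistics


def mean_significant_estimate(true_effect: float, n_per_group: int,
                              n_sims: int = 20_000, seed: int = 1) -> float:
    """Average estimated effect among simulated two-group studies that are
    significant at the two-sided 5% level in the expected direction.

    Assumes (hypothetically) known SD 1 in each group, so the estimated
    difference in means is Normal(true_effect, sqrt(2 / n_per_group)).
    """
    rng = random.Random(seed)
    se = (2.0 / n_per_group) ** 0.5
    significant = []
    for _ in range(n_sims):
        estimate = rng.gauss(true_effect, se)  # one simulated study
        if estimate / se > 1.96:               # keep only "significant" ones
            significant.append(estimate)
    if not significant:
        return float("nan")
    return statistics.fmean(significant)


for n in (20, 100, 500):
    print(f"n per group = {n:3d}: mean 'significant' estimate = "
          f"{mean_significant_estimate(0.2, n):.3f} (true effect 0.2)")
```

With 20 per group, only estimates above about 0.62 reach significance, so the ones that do are on average more than three times the true value; with 500 per group the significant estimates sit close to 0.2.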

Often, exaggerated claims in the media can be traced to press releases or to comments by researchers. In this case it’s hard to see the scientists being at fault; it looks as if it’s the Telegraph that has come up with the “100% accuracy” claim and the consequent fears for the future of the insurance industry.

(Thanks to Mark Hanna for pointing this one out on Twitter)

Thomas Lumley (@tslumley) is Professor of Biostatistics at the University of Auckland. His research interests include semiparametric models, survey sampling, statistical computing, foundations of statistics, and whatever methodological problems his medical collaborators come up with. He also blogs at Biased and Inefficient.

Thanks for posting this. It set all the alarm bells ringing, since there has never been, to my knowledge, a diagnostic test with 100% specificity and 100% sensitivity.

10 years ago

Surely there is almost no difference between the cancer and non-cancer groups here? As a test for future development of cancer it looks from this evidence as if it would be almost entirely useless. Is the claim based on the “significant” interaction? (I haven’t read the paper; I couldn’t access it for some reason.)

10 years ago

The claim seems to have been made up by the Telegraph. The paper makes no such claims; they report the interaction and an explanation for why previous results had been inconsistent.

10 years ago

I emailed them about the inaccurate claim. This morning I received an email indicating that, essentially, I was misunderstanding the article and claim and that:

“There is therefore no significant inaccuracy that would engage the Editors’ Code, but we have nevertheless slightly amended the article to clarify the point as a gesture of goodwill. I trust this is satisfactory.”

So it’s been changed, but they’re pretending the 100% claim wasn’t completely fabricated or misinterpreted from something in the first place.

10 years ago