Stat of the Week Competition Discussion: May 5-11 2012

If you’d like to comment on or debate any of this week’s Stat of the Week nominations, please do so below!

With the PBRF research assessments happening this year, there is sure to be another round next year of universities using the results in creative ways to make themselves look good. When you have a large number of variables to take into account, it’s easy to come up with apparently-reasonable weightings that make one institution look better than another.
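To see how much the weighting alone can decide the winner, here is a toy sketch with three made-up institutions scored on three made-up criteria. The names, scores and weights are all hypothetical (they are not PBRF data or categories), and a simple weighted sum is assumed as the way of combining criteria.

```python
# Hypothetical criterion scores (0 to 1) for three made-up institutions.
# These are NOT PBRF results; they only illustrate the effect of weighting.
SCORES = {
    "Uni A": {"research": 0.9, "teaching": 0.5, "funding": 0.6},
    "Uni B": {"research": 0.6, "teaching": 0.9, "funding": 0.5},
    "Uni C": {"research": 0.5, "teaching": 0.6, "funding": 0.9},
}

def rank(weights: dict[str, float]) -> list[str]:
    """Order institutions by the weighted sum of their scores, best first."""
    def composite(name: str) -> float:
        return sum(weights[criterion] * score for criterion, score in SCORES[name].items())
    return sorted(SCORES, key=composite, reverse=True)

# Three weightings, each of which could be presented as "reasonable".
for weights in (
    {"research": 0.50, "teaching": 0.25, "funding": 0.25},
    {"research": 0.25, "teaching": 0.50, "funding": 0.25},
    {"research": 0.25, "teaching": 0.25, "funding": 0.50},
):
    print(weights, "->", rank(weights))
```

Each institution comes out on top under one of the three weightings, even though none of the underlying scores changed.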

A dramatic example of this is the ranking of US law schools. The most widely known rankings are from US News, and there’s another popular set from Brian Leiter, a professor at the University of Texas, which aims to focus only on quality of education. A third set is published by the slightly unusual Thomas M. Cooley Law School. There is definitely correlation in the top rankings: for example, Harvard tops the Cooley rankings and is second in the other two. The graph below shows the three rankings and the lower quartile of GPA and LSAT results for some top colleges. (I got the data from here, and added the Cooley rankings by hand.)

Perhaps you were wondering about the two colored dots?

The orange dot is the University of Texas, Prof. Leiter’s employer. His ranking of his own school falls between the other two rankings. The red dot is Thomas M. Cooley Law School. US News doesn’t place it in the top 100 (it doesn’t give numbered ranks beyond the first 100), but Cooley’s own ranking puts it second in the US, behind only Harvard.

The Herald has a story claiming that a set of chemicals that have been proposed as an antibacterial additive for meat actually have large effects on sex-hormone levels. Usually this would be a story about the need to ban the chemicals immediately, but this time the headline is “‘Viagra effect’ from pomegranate juice”, and they’re in favour of it.

Of course, the ‘Viagra effect’ label is completely bogus (the quote marks suggest that it comes from the researchers’ press release, which would be very dodgy if true). The researchers claim to have found an increase in testosterone levels in men and women who drank a daily glass of pomegranate juice. Viagra is involved in blood vessel dilation; it has nothing to do with testosterone, and a previous suggestion that pomegranate juice might really have Viagra-like effects has been tested and rejected.

So, what about the testosterone effects? Well, what we have is a story based on a press release about a small, unpublished, uncontrolled, open-label study. The most positive thing one could say about this is “It will be worth waiting for the real publication” or, perhaps, “I hope it’s not true, because messing with steroid hormones like that is scary”. It’s reassuring to note that last year the same group said pomegranate juice reduced office stress. In 2009, they said it reduced blood pressure and cortisol levels. You will notice that the last link is a press release from a manufacturer of pomegranate juice, who sponsored all these studies. I haven’t linked to actual publications, because according to the PubMed database neither of these results has been published yet either.

There’s a lot of this around: a different pomegranate juice manufacturer was sued by the US Federal Trade Commission in 2010. The Wall Street Journal wrote:

The FTC’s target is not POM’s generally worded, eye-catching ads with lines such as “Cheat Death,” said an agency official. That ad showed a bottle of pomegranate juice with a rope around its neck.

Instead, the government complaint is directed at more specific health claims. In addition to talking about arterial plaque and blood flow, some POM ads describe company-funded clinical trials that, according to the company, show POM juice products can slow the progression of prostate cancer by lowering the level of antigens in the body called PSAs.

The agency’s complaint says that some of POM’s studies did not show heart disease benefits and that the prostate cancer study wasn’t conducted in a standard, scientifically rigorous manner.

The findings about pomegranate juice could be true, but it’s clear that the target isn’t people who actually care whether they are true.

Along similar lines to our Stats Crimes, Flowing Data has posted a list of 5 common statistical fallacies and examples:

Stuff has a story on a new genetic finding: the cause of (naturally) blond hair in Melanesians, which turns out to be different from the cause in Europeans. You can read the full paper at Science (annoying free registration required).

The researchers looked at DNA samples from 43 blond and 42 dark-haired Solomon Islanders. First, they looked at a grid of DNA markers that are relatively easy and cheap to measure. This pointed out a region of the genome that differed between the blond and dark-haired groups. The region contained just one gene, so they were then able to determine the complete genetic sequence of the gene in 12 people from each group. This led to a single genetic change that was a plausible candidate for causing the difference, which the team then measured in all 85 study participants, confirming that it did determine hair color.
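The first, marker-scan step boils down to asking, marker by marker, whether allele frequencies differ between the two groups. The paper’s exact statistical method isn’t described here, so as an assumption this sketch uses one standard choice, a Pearson chi-square test on a 2×2 table of allele counts (group by allele). The counts in the usage lines are hypothetical.

```python
import math

def allele_chi_square(g1_alt: int, g1_ref: int, g2_alt: int, g2_ref: int) -> tuple[float, float]:
    """Pearson chi-square test (1 df, no continuity correction) on a 2x2 table.

    Rows are the two groups (e.g. blond, dark-haired); columns are counts of the
    two alleles at one marker. Returns (statistic, p-value).
    """
    table = [[g1_alt, g1_ref], [g2_alt, g2_ref]]
    total = sum(map(sum, table))
    row_sums = [sum(row) for row in table]
    col_sums = [sum(col) for col in zip(*table)]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_sums[i] * col_sums[j] / total
            stat += (table[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))

# Hypothetical allele counts: 43 blond people carry 86 alleles, 42 dark-haired people 84.
print(allele_chi_square(60, 26, 10, 74))  # a marker that differs sharply between groups
print(allele_chi_square(43, 43, 42, 42))  # identical frequencies: statistic 0, p-value 1
```

In a real scan this test runs at every marker, so the threshold for a “hit” has to be far stricter than p < 0.05 to allow for the large number of tests.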

The result is interesting, but not all that useful. The gene responsible was already known to be involved in hair and skin color, in humans and in other animals, so that didn’t provide anything new, and if you want to find out or change someone’s hair colour, there are easier ways. Still, it’s interesting that blond hair has appeared (at least) twice — most traits with multiple independent origins are more obviously useful, like being able to digest milk as an adult, or being immune to malaria.

This general approach of a wide scan of markers and then follow-up sequencing is being used by other groups, including a consortium I’m involved in. Although it worked well for the hair-color gene, it’s a bit more difficult to get clear results for less dramatic differences in things like blood pressure. Fortunately, we have larger sample sizes to work with, so there’s some chance. We’re hoping that finding the specific genetic mechanisms behind some of the differences will provide leverage for opening up more understanding of how heart disease and aging work. If we’re lucky.

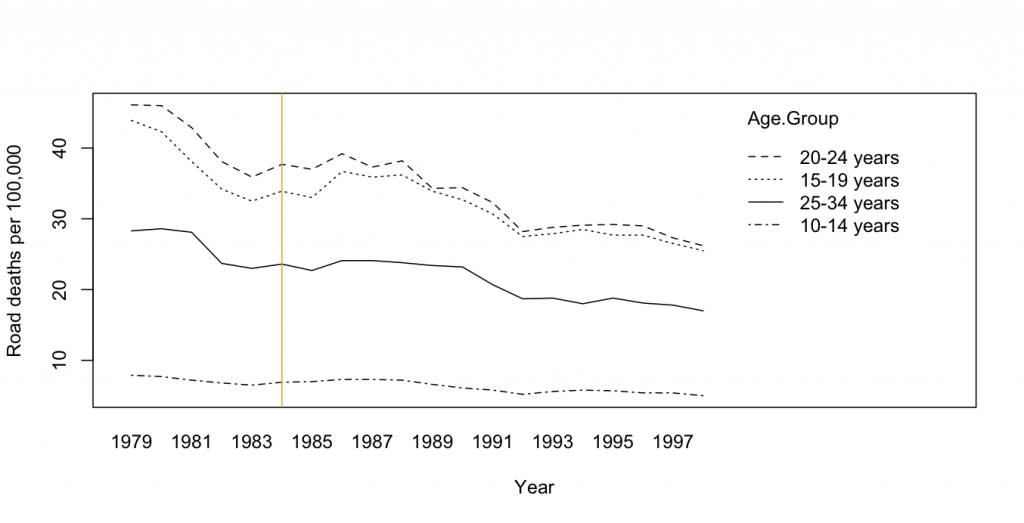

In an earlier post I looked at male youth suicide rates in the US before and after the drinking age was raised in July 1984, and said that expecting a decrease in road deaths made sense. It does make sense, but it seems that it didn’t happen in the US. The graph shows road deaths per 100,000 people by age group (from CDC), and there isn’t anything prominent that happens in 1984 or 1985. The pattern is pretty much the same for ages 15-19, 20-24, and 25-34. The younger two groups would have been affected by the law (with its supporters usually arguing that the youngest of the groups is the real target) and the oldest group would not have been affected. You can think of all sorts of explanations for why a difference might not have been seen (for example, the US is bad at detecting and deterring drunk drivers), but the data has to be disappointing to people who want a change in the drinking age.
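Using the 25-34 age group as a comparison is the logic of a difference-in-differences estimate: the change in the affected group minus the change in an unaffected group over the same period. A minimal sketch, with hypothetical yearly rates rather than the CDC figures:

```python
from statistics import mean

def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Change in the affected group minus change in the comparison group.

    Each argument is a list of yearly rates (e.g. road deaths per 100,000).
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change

# Hypothetical rates: both groups fall by 5 per 100,000, so the law gets no credit.
print(diff_in_diff([40.0, 40.0], [35.0, 35.0], [30.0, 30.0], [25.0, 25.0]))  # → 0.0
```

When all age groups move in parallel, as in the graph, the estimate is close to zero even though every group improved.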

It’s interesting that there hasn’t been any real discussion in the NZ media of what happened when the US raised the drinking age.

The Herald has a story about premature birth rates across the world, which I will hijack to write about an important NZ scientific contribution, and a revolution in medical statistics.

One of the basic treatments for premature babies, which unfortunately is not available everywhere, is a simple $1 shot of corticosteroids. This treatment was discovered accidentally by the great NZ medical researcher Graham Liggins. Liggins was interested in what triggered preterm birth, and hypothesised that steroids were involved. In experiments on sheep he didn’t find any effect on prematurity, but noticed huge differences in lung development in premature lambs given steroids. A clinical trial in people found a dramatic reduction in deaths, but no-one really paid attention. After rejection from the Lancet, the trial results were published in another journal in 1972.

Over the following 20 years several additional trials were conducted, most of them too small to show a convincing effect. Finally all the evidence was put together and obstetricians accepted that corticosteroids had an almost magical effect on lung development in premature babies. The results of all the trials and the summary of their collective evidence forms the logo of the Cochrane Collaboration, an organisation dedicated to ensuring that randomized trial data doesn’t get lost, but ends up being incorporated in medical knowledge.
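Putting the trials together is what a meta-analysis does. One common technique, assumed here and not necessarily the exact method behind the Cochrane logo, is fixed-effect inverse-variance pooling of log odds ratios. The trial counts below are hypothetical; they show how trials that are each too small to be convincing can combine into a clear result.

```python
import math

def log_odds_ratio(events_t: int, n_t: int, events_c: int, n_c: int) -> tuple[float, float]:
    """Log odds ratio (treated vs control) and its approximate variance (Woolf's method)."""
    a, b = events_t, n_t - events_t
    c, d = events_c, n_c - events_c
    if 0 in (a, b, c, d):
        # Common continuity correction so the log and the variance are defined.
        a, b, c, d = a + 0.5, b + 0.5, c + 0.5, d + 0.5
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d

def pooled_odds_ratio(trials) -> tuple[float, float, float]:
    """Fixed-effect inverse-variance pooled odds ratio with an approximate 95% CI."""
    total_weight = 0.0
    weighted_sum = 0.0
    for trial in trials:
        estimate, variance = log_odds_ratio(*trial)
        weight = 1 / variance  # more precise trials count for more
        total_weight += weight
        weighted_sum += weight * estimate
    pooled = weighted_sum / total_weight
    se = math.sqrt(1 / total_weight)
    return tuple(math.exp(x) for x in (pooled, pooled - 1.96 * se, pooled + 1.96 * se))

# Hypothetical trials: (deaths on steroids, babies on steroids, deaths on control, babies on control)
trials = [(6, 50, 11, 50)] * 5

print("one trial:", pooled_odds_ratio(trials[:1]))  # CI crosses 1: not convincing alone
print("all five: ", pooled_odds_ratio(trials))      # CI lies entirely below 1
```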

This September, the annual meeting of the Cochrane Collaboration is being held in Auckland, so if you see nerdy medical types roaming the streets, be nice to them. They can be useful.

A record low in road deaths last month has been accompanied by an unusually good Herald story. Andy Knackstedt from the Transport Agency is quoted as saying:

“It’s too early to say what may or may not be responsible for the lower deaths over the course of one month.

“But we do know that over the long term, people are driving at speeds that are more appropriate to the conditions, that they’re looking to buy themselves and their families safer vehicles, that the engineers who design the roads are certainly making a big effort to make roads and roadsides more forgiving so if a crash does take place it doesn’t necessarily cost someone their life.”

That’s a good summary. Luck certainly plays a role in month-to-month variation, and single-month changes tend to be over-interpreted, but the recent trends in road deaths are real: much stronger than random variation could produce. We don’t know how much of the decline is due to the state of the economy, to more-careful driving following the rule changes, or to many other possible explanations.
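To get a feel for how far a monthly count can move by luck alone, here is a sketch that assumes deaths behave roughly like a Poisson count with a steady underlying rate. That is an approximation (crashes with several deaths make real counts more variable), and the mean of 25 deaths a month is hypothetical.

```python
import math

def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu), summing the probability mass directly."""
    term = math.exp(-mu)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= mu / i    # P(X = i) from P(X = i - 1)
        total += term
    return total

def luck_range(mu: float, coverage: float = 0.95) -> tuple[int, int]:
    """Central range of monthly counts that occurs with probability at least `coverage`."""
    tail = (1 - coverage) / 2
    low = 0
    while poisson_cdf(low, mu) < tail:
        low += 1
    high = low
    while poisson_cdf(high, mu) < 1 - tail:
        high += 1
    return low, high

print(luck_range(25))  # hypothetical average of 25 deaths a month
```

Under these assumptions the range runs roughly ten deaths either side of the average, so one low month on its own says little; a sustained trend over several years is a different matter.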

[Update: when I wrote this, I didn’t realise it was the top front-page story in the print edition, which is definitely going beyond the limits of the data]

Here are the team ratings prior to Round 9, along with the ratings at the start of the season. I have created a brief description of the method I use for predicting rugby games. Go to my Department home page to see this.

| Team | Current Rating | Rating at Season Start | Difference |
|---|---|---|---|
| Storm | 9.64 | 4.63 | 5.00 |
| Broncos | 7.44 | 5.57 | 1.90 |
| Sea Eagles | 4.66 | 9.83 | -5.20 |
| Dragons | 2.78 | 4.36 | -1.60 |
| Knights | 1.79 | 0.77 | 1.00 |
| Wests Tigers | 1.64 | 4.52 | -2.90 |
| Cowboys | 1.41 | -1.32 | 2.70 |
| Warriors | 1.25 | 5.28 | -4.00 |
| Bulldogs | 1.22 | -1.86 | 3.10 |
| Rabbitohs | -0.37 | 0.04 | -0.40 |
| Sharks | -1.12 | -7.97 | 6.90 |
| Roosters | -4.71 | 0.25 | -5.00 |
| Panthers | -6.40 | -3.40 | -3.00 |
| Raiders | -6.62 | -8.40 | 1.80 |
| Eels | -7.54 | -4.23 | -3.30 |
| Titans | -8.80 | -11.80 | 3.00 |

So far there have been 64 matches played, 36 of which were correctly predicted, a success rate of 56.25%.

Here are the predictions for last week’s games.

| | Game | Date | Score | Prediction | Correct |
|---|---|---|---|---|---|
| 1 | Dragons vs. Roosters | Apr 25 | 28 – 24 | 13.52 | TRUE |
| 2 | Storm vs. Warriors | Apr 25 | 32 – 14 | 11.92 | TRUE |
| 3 | Bulldogs vs. Sea Eagles | Apr 27 | 10 – 12 | 1.64 | FALSE |
| 4 | Broncos vs. Titans | Apr 27 | 26 – 6 | 20.88 | TRUE |
| 5 | Rabbitohs vs. Cowboys | Apr 28 | 20 – 16 | 2.47 | TRUE |
| 6 | Raiders vs. Sharks | Apr 29 | 22 – 44 | 3.00 | FALSE |
| 7 | Eels vs. Wests Tigers | Apr 29 | 30 – 31 | -5.38 | TRUE |
| 8 | Knights vs. Panthers | Apr 30 | 34 – 14 | 11.30 | TRUE |
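The Correct column records whether the predicted margin pointed to the team that actually won. A small sketch that re-derives it from the table above; the helper name is my own, and treating a draw as incorrect is an assumption, since the post doesn’t say how draws are scored (none occur here).

```python
# Rows transcribed from the table above:
# (home, away, home score, away score, predicted margin, "Correct" as published)
LAST_WEEK = [
    ("Dragons", "Roosters", 28, 24, 13.52, True),
    ("Storm", "Warriors", 32, 14, 11.92, True),
    ("Bulldogs", "Sea Eagles", 10, 12, 1.64, False),
    ("Broncos", "Titans", 26, 6, 20.88, True),
    ("Rabbitohs", "Cowboys", 20, 16, 2.47, True),
    ("Raiders", "Sharks", 22, 44, 3.00, False),
    ("Eels", "Wests Tigers", 30, 31, -5.38, True),
    ("Knights", "Panthers", 34, 14, 11.30, True),
]

def prediction_correct(home_score: int, away_score: int, predicted_margin: float) -> bool:
    """True when the sign of the predicted margin matches the actual result.

    Positive margins favour the home team. Assumption: a draw counts as incorrect.
    """
    actual = home_score - away_score
    if actual == 0:
        return False
    return (actual > 0) == (predicted_margin > 0)

checked = [prediction_correct(h, a, m) for _, _, h, a, m, _ in LAST_WEEK]
print("matches published column:", checked == [row[-1] for row in LAST_WEEK])
print(f"{sum(checked)} of {len(checked)} correct last week")
print(f"season so far: 36 of 64 = {36 / 64:.2%}")
```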

Here are the predictions for Round 9.

| | Game | Date | Winner | Prediction |
|---|---|---|---|---|
| 1 | Eels vs. Bulldogs | May 04 | Bulldogs | -4.30 |
| 2 | Cowboys vs. Dragons | May 04 | Cowboys | 3.10 |
| 3 | Warriors vs. Broncos | May 05 | Broncos | -1.70 |
| 4 | Titans vs. Wests Tigers | May 05 | Wests Tigers | -5.90 |
| 5 | Panthers vs. Storm | May 05 | Storm | -11.50 |
| 6 | Sea Eagles vs. Raiders | May 06 | Sea Eagles | 15.80 |
| 7 | Roosters vs. Knights | May 06 | Knights | -2.00 |
| 8 | Rabbitohs vs. Sharks | May 07 | Rabbitohs | 5.20 |

Here are the team ratings prior to Week 11, along with the ratings at the start of the season. I have created a brief description of the method I use for predicting rugby games. Go to my Department home page to see this.

| Team | Current Rating | Rating at Season Start | Difference |
|---|---|---|---|
| Crusaders | 9.36 | 10.46 | -1.10 |
| Stormers | 7.60 | 6.59 | 1.00 |
| Bulls | 7.19 | 4.16 | 3.00 |
| Chiefs | 4.07 | -1.17 | 5.20 |
| Waratahs | 0.77 | 4.98 | -4.20 |
| Sharks | -0.03 | 0.87 | -0.90 |
| Reds | -1.68 | 5.03 | -6.70 |
| Highlanders | -1.95 | -5.69 | 3.70 |
| Hurricanes | -2.16 | -1.90 | -0.30 |
| Brumbies | -2.16 | -6.66 | 4.50 |
| Blues | -2.23 | 2.87 | -5.10 |
| Cheetahs | -2.56 | -1.46 | -1.10 |
| Force | -4.98 | -4.95 | -0.00 |
| Lions | -10.83 | -10.82 | -0.00 |
| Rebels | -13.72 | -15.64 | 1.90 |

So far there have been 66 matches played, 45 of which were correctly predicted, a success rate of 68.2%.

Here are the predictions for last week’s games.

| | Game | Date | Score | Prediction | Correct |
|---|---|---|---|---|---|
| 1 | Blues vs. Reds | Apr 27 | 11 – 23 | 7.00 | FALSE |
| 2 | Lions vs. Brumbies | Apr 27 | 20 – 34 | -2.30 | TRUE |
| 3 | Chiefs vs. Hurricanes | Apr 28 | 33 – 14 | 9.20 | TRUE |
| 4 | Force vs. Stormers | Apr 28 | 3 – 17 | -7.00 | TRUE |
| 5 | Cheetahs vs. Highlanders | Apr 28 | 33 – 36 | 5.20 | FALSE |
| 6 | Waratahs vs. Crusaders | Apr 29 | 33 – 37 | -4.10 | TRUE |

Here are the predictions for Week 11. The prediction is my estimated points difference, with a positive margin being a win to the home team and a negative margin a win to the away team.

| | Game | Date | Winner | Prediction |
|---|---|---|---|---|
| 1 | Hurricanes vs. Blues | May 04 | Hurricanes | 4.60 |
| 2 | Rebels vs. Bulls | May 04 | Bulls | -16.40 |
| 3 | Chiefs vs. Lions | May 05 | Chiefs | 19.40 |
| 4 | Brumbies vs. Waratahs | May 05 | Brumbies | 1.60 |
| 5 | Sharks vs. Highlanders | May 05 | Sharks | 6.40 |
| 6 | Cheetahs vs. Force | May 05 | Cheetahs | 6.90 |
| 7 | Crusaders vs. Reds | May 06 | Crusaders | 15.50 |
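The full method is described on my Department home page rather than here, but working backwards from the tables, every Week 11 prediction matches, to within 0.05 points, the home team’s rating minus the away team’s rating plus a home-ground advantage of about 4.5 points. The sketch below reproduces that arithmetic; the 4.5 is an inference from the published numbers, not a stated parameter, and how the ratings are updated after each game is not shown.

```python
# Ratings before Week 11, copied from the table above.
RATINGS = {
    "Crusaders": 9.36, "Stormers": 7.60, "Bulls": 7.19, "Chiefs": 4.07,
    "Waratahs": 0.77, "Sharks": -0.03, "Reds": -1.68, "Highlanders": -1.95,
    "Hurricanes": -2.16, "Brumbies": -2.16, "Blues": -2.23, "Cheetahs": -2.56,
    "Force": -4.98, "Lions": -10.83, "Rebels": -13.72,
}

# Assumption: a flat home-ground advantage, inferred from the published
# predictions rather than stated in the post.
HOME_ADVANTAGE = 4.5

def predicted_margin(home: str, away: str) -> float:
    """Predicted points difference; positive favours the home team."""
    return RATINGS[home] - RATINGS[away] + HOME_ADVANTAGE

WEEK_11 = [("Hurricanes", "Blues"), ("Rebels", "Bulls"), ("Chiefs", "Lions"),
           ("Brumbies", "Waratahs"), ("Sharks", "Highlanders"),
           ("Cheetahs", "Force"), ("Crusaders", "Reds")]

for home, away in WEEK_11:
    margin = predicted_margin(home, away)
    winner = home if margin > 0 else away
    print(f"{home} vs. {away}: {winner} by {abs(margin):.1f}")
```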